Authors:

Preetha Vijayan, Prashant Bhat, Bahram Zonooz, Elahe Arani

Problem Statement:

- Existing continual learning methods (parameter isolation, weight regularization, rehearsal) are orthogonal, and each has its own limitation: capacity saturation, class-discrimination failure, and buffer overfitting, respectively.

- No unified framework leverages their complementary strengths.

Research Goals:

- Design a unified CL paradigm combining multiple biological and algorithmic mechanisms to mitigate forgetting and promote forward transfer.

- Desired Outcomes:

  - Preserve performance on past tasks (stability)

  - Maintain plasticity for new tasks

  - Reuse freed capacity to accommodate many tasks without growing the model

  - Outperform individual CL approaches on standard benchmarks

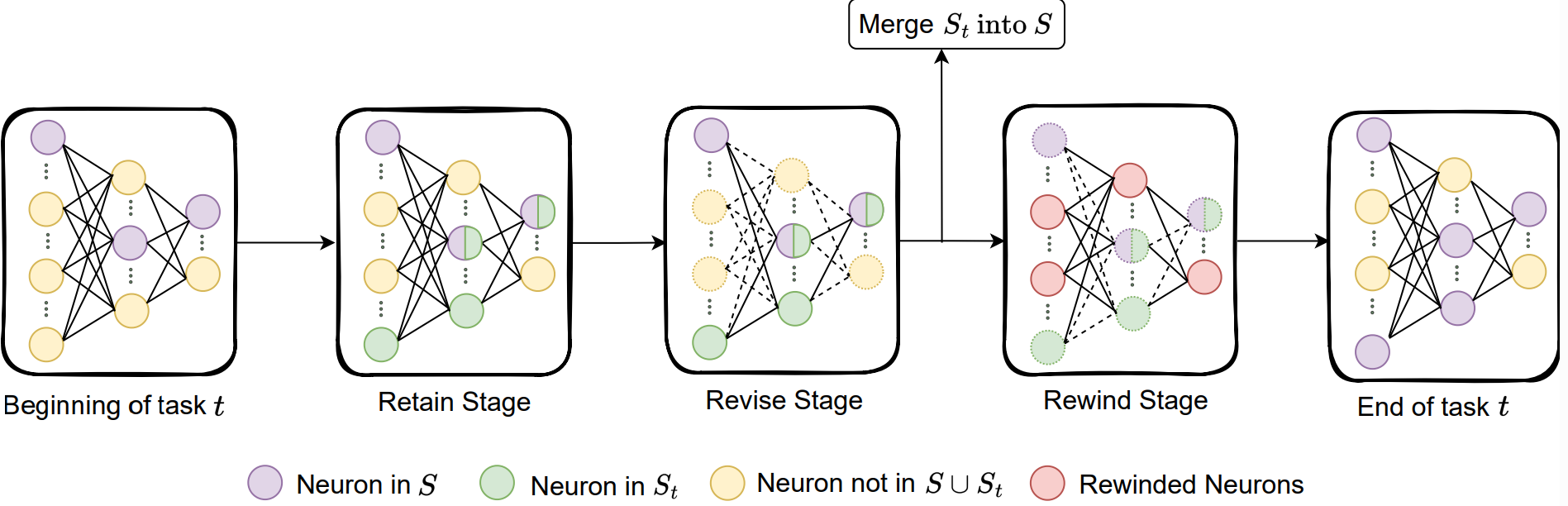

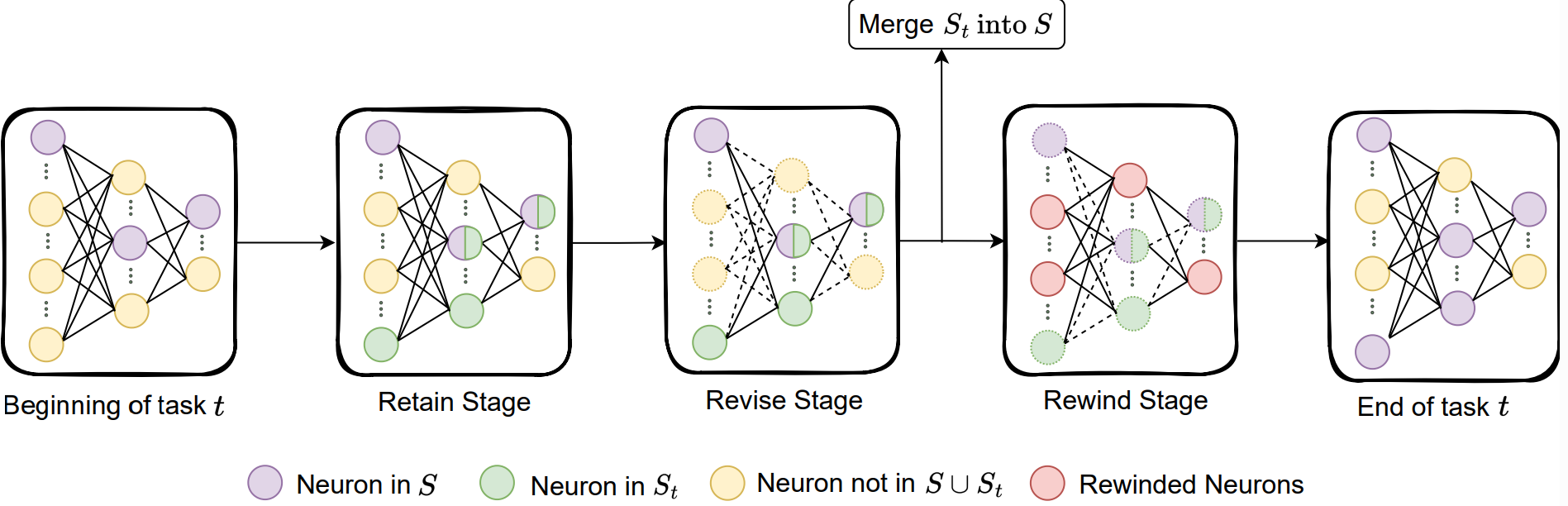

Proposed Approach: TriRE (REtain, REvise & REwind)

Retain

- For each new task, Retain updates connections not used previously with new task data, while connections shared with previous tasks are updated using replay data under EMA (Exponential Moving Average) regularization.

- A subnetwork for the given task is then identified and saved using CWI (Continual Weight Importance).
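The Retain step above can be sketched with plain Python lists standing in for flattened weight tensors. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`retain_step`, `ema_update`, `top_k_mask`), the decay value, and the use of weight magnitude as the importance score are all hypothetical. In particular, magnitude is only a stand-in for CWI, whose exact formula is not given in these notes, and the sketch only maintains the EMA copy without showing how it enters the loss.

```python
def retain_step(weights, cumulative_mask, grad_new, grad_replay, lr):
    """One masked SGD step (assumed form).

    Connections already claimed by earlier tasks (mask == 1) follow the
    replay gradient; free connections (mask == 0) follow the new-task gradient.
    """
    return [
        w - lr * (g_replay if used else g_new)
        for w, used, g_new, g_replay in zip(
            weights, cumulative_mask, grad_new, grad_replay
        )
    ]


def ema_update(ema_weights, weights, decay=0.999):
    """Exponential moving average of the working weights (decay is assumed)."""
    return [decay * e + (1 - decay) * w for e, w in zip(ema_weights, weights)]


def top_k_mask(scores, keep_fraction):
    """0/1 mask keeping the highest-scoring fraction of connections.

    The scores are a placeholder for CWI; any importance measure fits here.
    """
    k = max(1, round(keep_fraction * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = set(ranked[:k])
    return [1 if i in keep else 0 for i in range(len(scores))]


# Toy usage: four connections, two of them already used by earlier tasks.
weights = [0.5, -0.2, 0.1, 0.9]
cumulative_mask = [1, 0, 0, 1]
updated = retain_step(
    weights,
    cumulative_mask,
    grad_new=[1.0, 1.0, 1.0, 1.0],
    grad_replay=[0.1, 0.1, 0.1, 0.1],
    lr=0.1,
)
ema = ema_update(weights, updated)
task_mask = top_k_mask([abs(w) for w in updated], keep_fraction=0.5)
```

Keeping the mask as a separate 0/1 list mirrors the idea that the subnetwork is saved per task, so later stages can consult it without touching the weights.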

Revise

- Revise focuses on precise weight updates: it applies small updates using replay data to weights that are common to both current and previous tasks, while weights used only in the current task receive updates from the current task's data.
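As a rough sketch of the Revise rule described above, the snippet below splits the current task's mask into connections shared with earlier tasks and connections used only by the current task, then applies a scaled-down replay update to the former and a regular current-task update to the latter. The names `split_masks`, `revise_step`, and `shared_lr_scale`, and the scale value of 0.1, are assumptions for illustration only.

```python
def split_masks(task_mask, cumulative_mask):
    """Split the current task's 0/1 mask into shared and current-only parts."""
    shared = [t & c for t, c in zip(task_mask, cumulative_mask)]
    current_only = [t & (1 - c) for t, c in zip(task_mask, cumulative_mask)]
    return shared, current_only


def revise_step(weights, task_mask, cumulative_mask,
                grad_current, grad_replay, lr, shared_lr_scale=0.1):
    """One Revise-style update (assumed form).

    Shared connections take a small step on the replay gradient,
    current-only connections take a full step on the current-task gradient,
    and connections outside the current task's mask are left untouched.
    """
    shared, current_only = split_masks(task_mask, cumulative_mask)
    revised = []
    for w, s, c, g_cur, g_rep in zip(
        weights, shared, current_only, grad_current, grad_replay
    ):
        if s:
            w = w - lr * shared_lr_scale * g_rep
        elif c:
            w = w - lr * g_cur
        revised.append(w)
    return revised


# Toy usage: connection 0 is shared, connection 1 is current-only,
# connection 2 belongs to neither and stays fixed.
revised = revise_step(
    weights=[1.0, 1.0, 1.0],
    task_mask=[1, 1, 0],
    cumulative_mask=[1, 0, 0],
    grad_current=[1.0, 1.0, 1.0],
    grad_replay=[1.0, 1.0, 1.0],
    lr=0.1,
)
```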

Rewind

- Reset non-cumulative weights to a checkpoint saved during Retain (taken 70–90% of the way through training).

- Retrain on current data to reactivate underused neurons (active forgetting + neurogenesis).
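The reset in Rewind amounts to a masked copy from the saved checkpoint. The sketch below assumes the cumulative mask marks every connection claimed by any task so far and that the checkpoint is a plain copy of the weights taken during Retain; the function name `rewind` is hypothetical, and the retraining that follows is not shown.

```python
def rewind(weights, checkpoint, cumulative_mask):
    """Restore connections outside the cumulative mask to their checkpointed values.

    Connections inside the mask (mask == 1) keep their current values.
    """
    return [
        w if keep else ckpt
        for w, ckpt, keep in zip(weights, checkpoint, cumulative_mask)
    ]


# Toy usage: only connection 0 is protected by the cumulative mask.
current = [0.3, 0.7, -0.1]
saved_during_retain = [0.0, 0.5, 0.2]
rewound = rewind(current, saved_during_retain, cumulative_mask=[1, 0, 0])
```

Returning a new list rather than modifying `weights` in place keeps the pre-rewind state available for comparison.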

Experimental Setup:

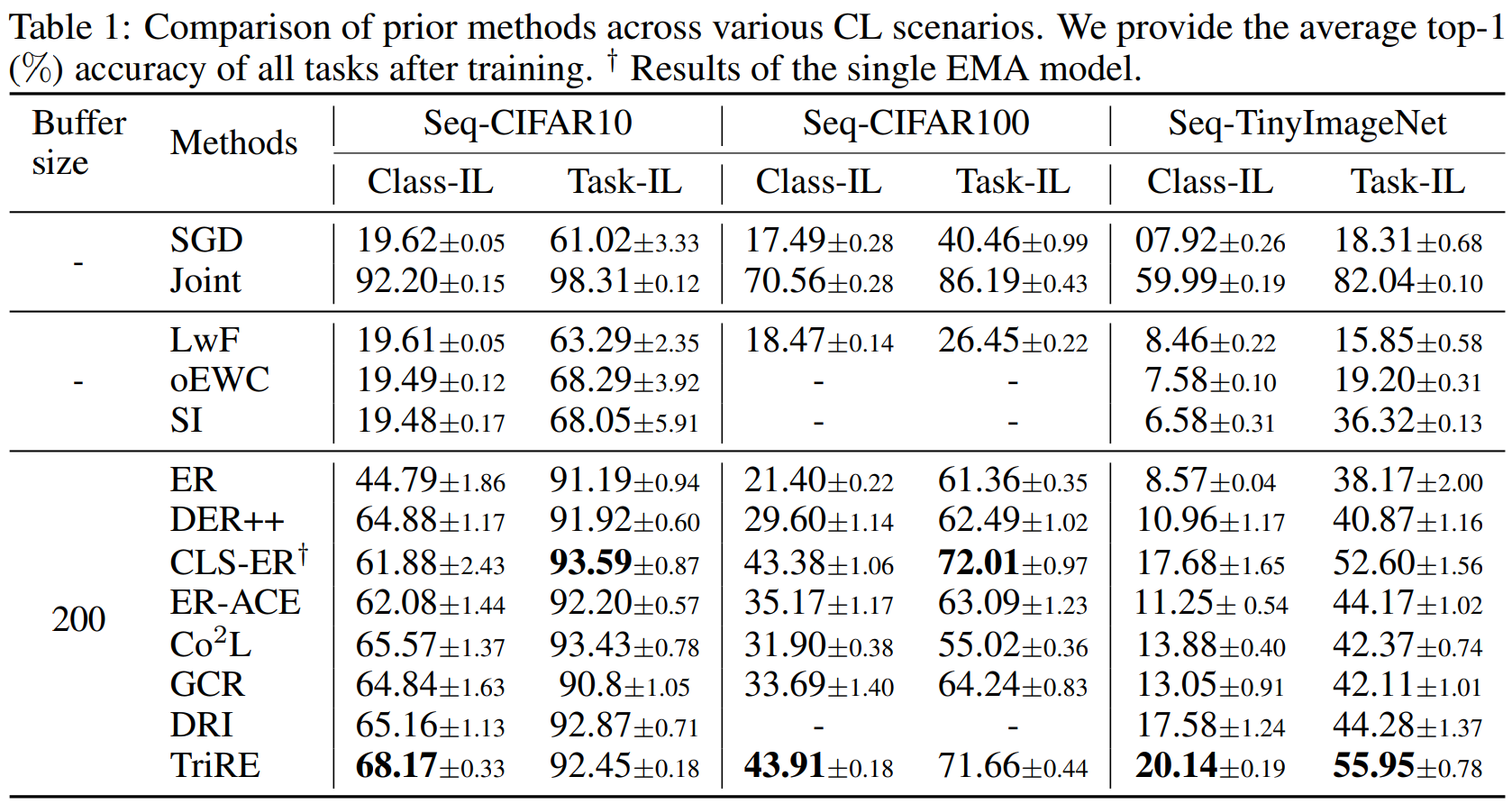

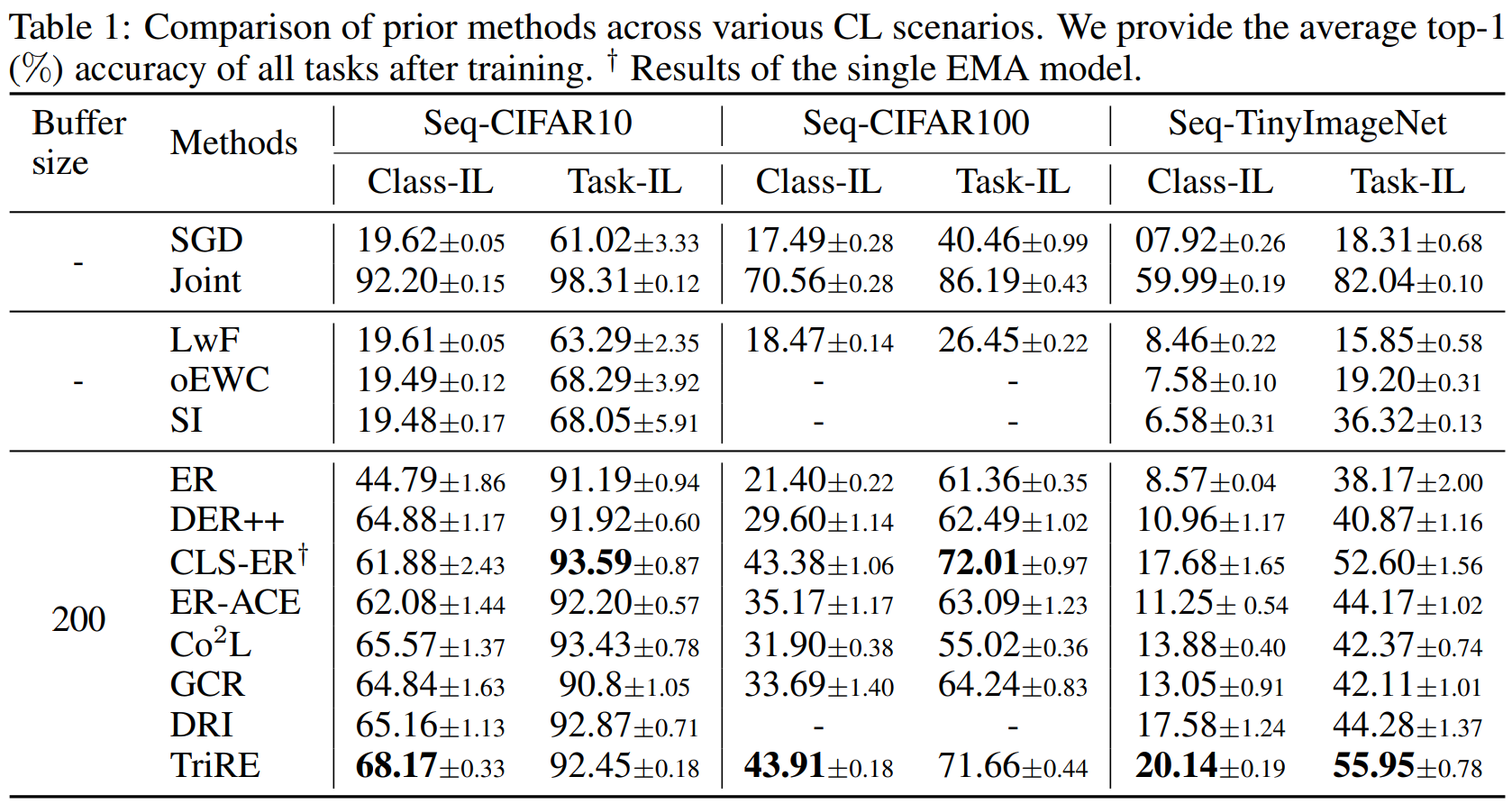

- Datasets: Seq‑CIFAR10 (5×2‑class), Seq‑CIFAR100 (5×20‑class), Seq‑TinyImageNet (10×20‑class).

- Backbone: ResNet‑18, batch size 32, 50 epochs per task, Adam optimizer.
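The task splits listed above (for example, 5 tasks of 2 classes each for Seq‑CIFAR10) can be generated with a small helper. This sketch assumes classes are assigned to tasks in contiguous label order, which these notes do not specify; `split_classes` is a hypothetical name.

```python
def split_classes(num_classes, num_tasks):
    """Partition class labels 0..num_classes-1 into equal, contiguous tasks."""
    if num_classes % num_tasks != 0:
        raise ValueError("num_classes must be divisible by num_tasks")
    per_task = num_classes // num_tasks
    return [
        list(range(t * per_task, (t + 1) * per_task))
        for t in range(num_tasks)
    ]


# Splits matching the listed benchmarks (label order is an assumption).
seq_cifar10 = split_classes(10, 5)          # 5 tasks x 2 classes
seq_cifar100 = split_classes(100, 5)        # 5 tasks x 20 classes
seq_tinyimagenet = split_classes(200, 10)   # 10 tasks x 20 classes
```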

Results:

Limitations:

- Increased memory overhead (EMA model, masks, checkpointed weights).

- Complex hyperparameter tuning.

- Unclear test-time protocol—are multiple masks used?

Strengths:

- First paper to harmonize parameter isolation, weight regularization, and rehearsal in a biologically inspired loop.

- Clear, accessible writing and presentation. That said, I would suggest reading the pseudocode carefully, since it gives much clearer details.

Future Work:

- Leverage intrinsic data/task structure for more efficient subnet selection.