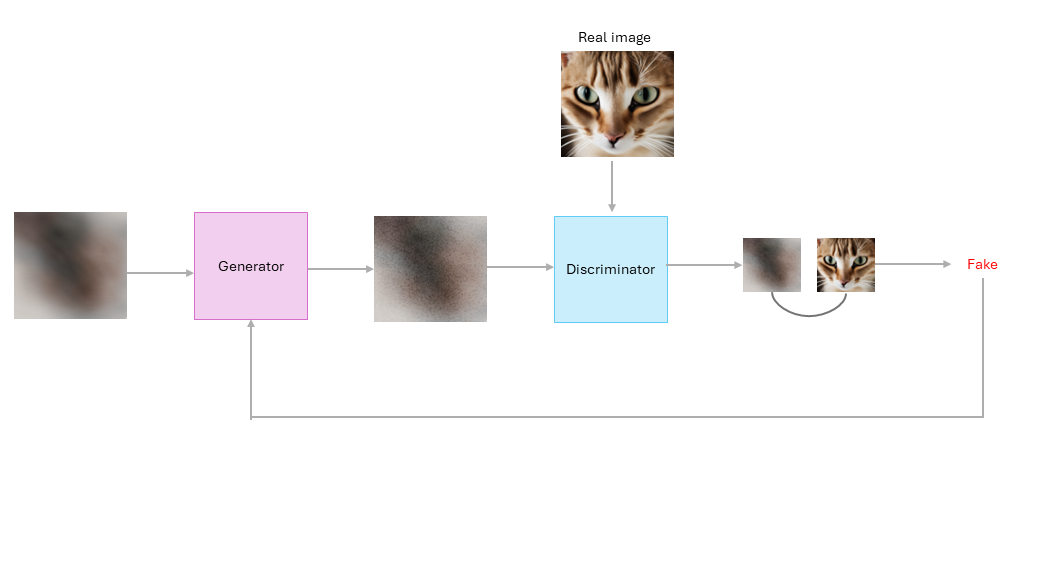

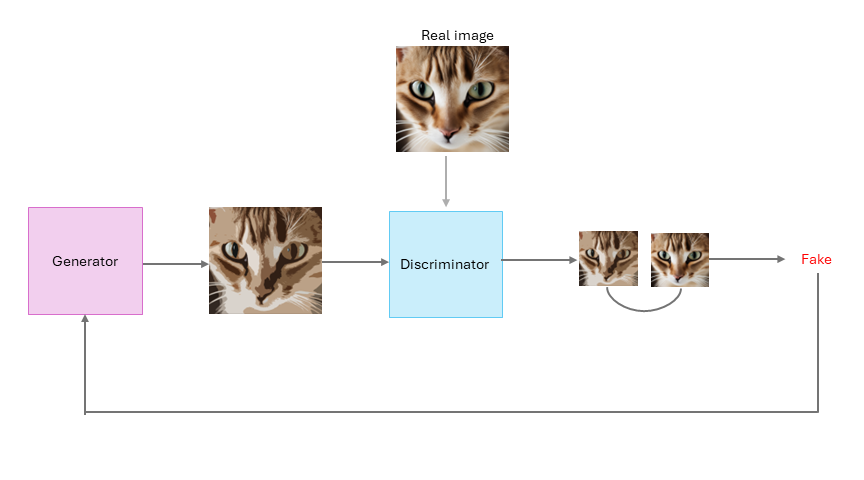

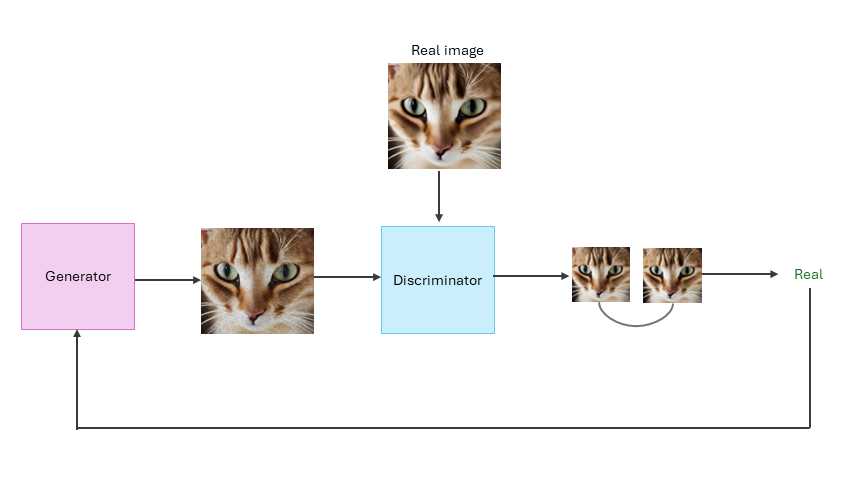

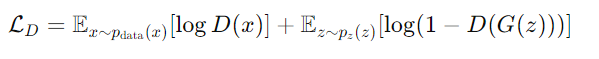

The core idea behind GANs is simple: use two neural networks to compete against each other in a zero-sum game, driving each other to improve until the generated data is indistinguishable from real data.

There are two key players in this game: the Generator and the Discriminator.

The Generator:

The Generator’s job is to create fake data that resembles the real data. It starts with a random noise vector z and transforms it through a series of layers into a data sample G(z). Initially, the generated samples are poor imitations, but as training progresses, the Generator gets better at creating realistic data.
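As a minimal sketch of the mapping from z to G(z), the snippet below builds a tiny two-layer generator in plain Python. The layer sizes, the ReLU and tanh activations, and the helper name `make_generator` are assumptions chosen for illustration; a real Generator would be a much larger network in a deep learning framework.

```python
import math
import random

def make_generator(noise_dim, hidden_dim, data_dim, seed=0):
    """Build a tiny two-layer generator with randomly initialised weights."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 0.5) for _ in range(noise_dim)] for _ in range(hidden_dim)]
    w2 = [[rng.gauss(0, 0.5) for _ in range(hidden_dim)] for _ in range(data_dim)]

    def generator(z):
        # Hidden layer: linear map (biases omitted for brevity) followed by ReLU.
        hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
        # Output layer: tanh keeps every coordinate of G(z) in [-1, 1].
        return [math.tanh(sum(w * h for w, h in zip(row, hidden))) for row in w2]

    return generator

rng = random.Random(42)
G = make_generator(noise_dim=8, hidden_dim=16, data_dim=4)
z = [rng.gauss(0, 1) for _ in range(8)]  # random noise vector z
sample = G(z)                            # fake data sample G(z)
```

Before any training, `sample` is just a deterministic function of the noise and the random weights, which is why early outputs look nothing like real data.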

The Discriminator:

The Discriminator acts as a detective. It receives both real data samples x and fake samples G(z) from the Generator and tries to tell them apart. For each input, it outputs a probability D(x) that the input is real. During training, the Discriminator gets better at identifying fake data, which in turn pushes the Generator to produce more convincing fakes.
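The competition between the two players is usually written as a single value function, following the original GAN formulation. Here $p_{\text{data}}$ (the real data distribution) and $p_z$ (the noise distribution) are symbols introduced for this formula and do not appear elsewhere in this article:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The Discriminator tries to make both terms large by pushing D(x) toward 1 on real samples and D(G(z)) toward 0 on fakes, while the Generator tries to make the second term small by pushing D(G(z)) toward 1.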

The Training Process

- Initialize: Both the Generator and Discriminator are initialized with random weights.

- Generate fake data: The Generator creates a batch of fake data samples from random noise.

- Train the Discriminator: The Discriminator is trained on a batch of real data samples and the fake data samples from the Generator. It learns to assign higher probabilities to real samples and lower probabilities to fake ones.

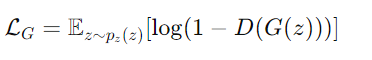

- Train the Generator: The Generator is trained to fool the Discriminator. Its weights are updated to maximize the probability D(G(z)) that the Discriminator assigns to the fake samples.

- Iterate: The generate, train-the-Discriminator, and train-the-Generator steps are repeated. Over time, the Generator becomes better at creating realistic data, and the Discriminator becomes better at distinguishing real from fake.
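The loop above can be sketched with a deliberately tiny one-dimensional GAN written in plain Python. Everything specific here is an assumption made for illustration: the real data is drawn from a Gaussian with mean 4, the Generator is the linear map G(z) = a*z + b, the Discriminator is a logistic model D(x) = sigmoid(w*x + c), and gradients are derived by hand rather than by a framework. Toy setups like this can oscillate rather than converge cleanly, so treat it as a picture of the update order, not as a recipe.

```python
import math
import random

EPS = 1e-12  # guards against log(0) when the Discriminator saturates

def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=200, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    # Initialize: both players start from random weights.
    a, b = rng.gauss(0, 1), rng.gauss(0, 1)  # Generator G(z) = a*z + b
    w, c = rng.gauss(0, 1), rng.gauss(0, 1)  # Discriminator D(x) = sigmoid(w*x + c)
    history = []
    for _ in range(steps):
        # Generate fake data from random noise.
        zs = [rng.gauss(0, 1) for _ in range(batch)]
        fake = [a * z + b for z in zs]
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]

        # Train the Discriminator: minimise -[log D(x) + log(1 - D(G(z)))].
        gw = gc = d_loss = 0.0
        for x, g in zip(real, fake):
            dx, dg = sigmoid(w * x + c), sigmoid(w * g + c)
            d_loss -= math.log(max(dx, EPS)) + math.log(max(1.0 - dg, EPS))
            gw += (dx - 1.0) * x + dg * g
            gc += (dx - 1.0) + dg
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Train the Generator: minimise -log D(G(z)), i.e. maximise D(G(z)).
        ga = gb = g_loss = 0.0
        for z in zs:
            dg = sigmoid(w * (a * z + b) + c)
            g_loss -= math.log(max(dg, EPS))
            ga += (dg - 1.0) * w * z
            gb += (dg - 1.0) * w
        a -= lr * ga / batch
        b -= lr * gb / batch
        history.append((d_loss / batch, g_loss / batch))
    return (a, b), (w, c), history

gen_params, disc_params, history = train_toy_gan()
```

Note that the Generator never sees real data directly. Its only learning signal is the gradient that flows back through the Discriminator's score.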

Various Proposed GAN Styles

GANs come in various styles, each with its own characteristics and applications:

DCGAN (Deep Convolutional GAN)

A DCGAN is a GAN whose generator and discriminator are both built from deep convolutional neural networks.

Key Features of DCGAN

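- Convolutional Layers: DCGANs build both networks from convolutional layers, dropping fully connected hidden layers and pooling layers, which helps in capturing spatial hierarchies in images.

- Batch Normalization: This technique is used in both the generator and discriminator networks to stabilize training by normalizing the inputs of each layer. The original DCGAN paper leaves it out of the generator's output layer and the discriminator's input layer.

- Strided Convolutions and Fractionally-Strided Convolutions: These replace pooling layers. Strided convolutions down-sample the data in the discriminator, and fractionally-strided (transposed) convolutions up-sample it in the generator.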
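To make the down-sampling and up-sampling concrete, here is a short sketch of the standard output-size arithmetic for the two layer types. The kernel size 4, stride 2, and padding 1 are assumed values that are common in DCGAN implementations, not settings taken from this article, and the function names are hypothetical.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial size after a strided convolution (down-sampling)."""
    return (size + 2 * padding - kernel) // stride + 1

def transposed_conv_output_size(size, kernel, stride, padding):
    """Spatial size after a fractionally-strided (transposed) convolution (up-sampling)."""
    return (size - 1) * stride - 2 * padding + kernel

# Discriminator-style path: each strided convolution halves the resolution.
size = 64
down = [size]
for _ in range(4):
    size = conv_output_size(size, kernel=4, stride=2, padding=1)
    down.append(size)

# Generator-style path: each transposed convolution doubles it again.
up = [size]
for _ in range(4):
    size = transposed_conv_output_size(size, kernel=4, stride=2, padding=1)
    up.append(size)

print(down)  # [64, 32, 16, 8, 4]
print(up)    # [4, 8, 16, 32, 64]
```

With these settings the generator path is an exact mirror of the discriminator path, and no pooling or unpooling layer is needed to change resolution.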

WGAN (Wasserstein Generative Adversarial Network)

It is an improved version of the traditional GAN that addresses some of the stability and training issues associated with GANs.

GANs are powerful but often difficult to train due to issues such as mode collapse, vanishing gradients, and instability. WGANs were introduced to provide more stable and meaningful training by using a different loss function based on the Wasserstein distance (also known as the Earth Mover's distance) instead of the Jensen-Shannon divergence used in traditional GANs.

Key Concepts in WGAN

- Wasserstein Distance: The Wasserstein distance measures how far apart two probability distributions are, as the minimum cost of moving probability mass to turn one into the other. It changes smoothly as the distributions move, so it provides meaningful gradients even when the generated data is far from the real data distribution.

- Critic Network: Instead of a discriminator, WGAN uses a critic network. The critic scores the real and generated data instead of classifying them as real or fake. The critic's output is used to approximate the Wasserstein distance between the real and generated data distributions.
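The following sketch illustrates both ideas on one-dimensional data. For two equal-sized 1-D samples, the Wasserstein-1 distance reduces to the mean absolute difference between the sorted samples, which makes it easy to compute directly. The function names are hypothetical, and the critic loss shown is the usual WGAN form, which is only a valid distance estimate when the critic is kept approximately 1-Lipschitz (for example by weight clipping or a gradient penalty, neither of which is implemented here).

```python
import random

def wasserstein_1d(xs, ys):
    """Wasserstein-1 distance between two equal-sized 1-D samples."""
    if len(xs) != len(ys):
        raise ValueError("samples must have the same size")
    return sum(abs(x - y) for x, y in zip(sorted(xs), sorted(ys))) / len(xs)

def critic_loss(real_scores, fake_scores):
    """Loss the critic minimises: mean score on fakes minus mean score on reals."""
    return sum(fake_scores) / len(fake_scores) - sum(real_scores) / len(real_scores)

rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(1000)]

# Move the "generated" sample further and further away from the real one.
shifts = (1.0, 5.0, 25.0)
distances = [wasserstein_1d(real, [x + s for x in real]) for s in shifts]

# Unbounded scores, not probabilities: the critic rates reals higher than fakes.
loss = critic_loss(real_scores=[2.0, 4.0], fake_scores=[1.0, 1.0])
```

Here each entry of `distances` matches its shift up to floating-point error, so the distance keeps growing in proportion to how far the generated sample sits from the real one instead of saturating. That is the property that keeps the training signal informative.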

Conditional GAN (cGAN)

It is an extension of the standard GAN model that includes conditional information in the generation process. This condition can be any auxiliary information that should influence the output, such as class labels or text descriptions.

Key Concepts in Conditional GAN

The key difference between a standard GAN and a cGAN is that both the generator and discriminator receive extra information about the data as a condition.

Generator:

The generator in a cGAN takes a noise vector z and a condition c as inputs. The condition c could be a class label or some other form of additional information.

The generator outputs a data sample G(z,c) that aims to be indistinguishable from real data conditioned on c.

Example: Let's say you want to generate images of digits conditioned on their labels (0-9). Here's how a cGAN would be set up:

- Condition Vector: If the condition is the digit label, you can represent it as a one-hot encoded vector. For instance, if the digit is '3', the condition vector could be [0,0,0,1,0,0,0,0,0,0].

- Generator Input: The noise vector z and the condition vector c are concatenated and fed into the generator to produce an image of the specified digit.

- Discriminator Input: The generated or real image and the condition vector are both fed into the discriminator to determine if the image is real and if it matches the condition.
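The digit setup above can be sketched in a few lines of plain Python. The noise dimension of 100 and the flattened 28x28 image are assumptions borrowed from typical MNIST-style examples, and the helper names are hypothetical. Concatenation is also only one simple way to inject the condition.

```python
import random

def one_hot(label, num_classes=10):
    """Encode a class label as a one-hot condition vector c."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def generator_input(z, label, num_classes=10):
    """Concatenate the noise vector z with the condition vector c."""
    return list(z) + one_hot(label, num_classes)

def discriminator_input(image_pixels, label, num_classes=10):
    """Concatenate a flattened (real or generated) image with the same condition."""
    return list(image_pixels) + one_hot(label, num_classes)

rng = random.Random(0)
z = [rng.gauss(0, 1) for _ in range(100)]  # noise vector z (assumed size 100)
c = one_hot(3)                             # condition for the digit '3'
g_in = generator_input(z, 3)               # length 100 + 10
d_in = discriminator_input([0.0] * (28 * 28), 3)  # length 784 + 10

print(c)  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

Because the discriminator sees the label alongside the image, a realistic '7' paired with the condition for '3' can still be rejected, which is what forces the generator to respect the condition.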